| Summary |

| Visual simulation of large real-world environments is one of the grand challenges of computer graphics. Applications include remote education, virtual heritage, specialist training, electronic commerce, and entertainment. In this project, we present a “Sea of Images,” an image-based approach to providing interactive, photorealistic walkthroughs of complex indoor environments. Our strategy is to densely sample viewpoints in a large static environment with omnidirectional images. We use a motorized cart to capture omnidirectional images every few inches on an eye-height plane throughout the environment. We then compress and store the images in a multiresolution hierarchy suitable for real-time prefetching during interactive walkthroughs. Finally, we render novel images for a simulated observer viewpoint using a feature-based warping algorithm. Our system acquires and preprocesses over 15,000 images covering more than 1000 square feet of environment space; the average distance from a random point on the eye-height plane to the nearest captured image is 1.5 inches. The average capture and processing time is 7 hours. We demonstrate photorealistic walkthroughs of real-world environments that reproduce specular reflections and occlusion effects while rendering at 20-30 frames per second. |
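As a rough sanity check on the sampling density quoted above, the sketch below estimates the spacing and the mean distance to the nearest sample under a simplifying assumption that is not stated in the source: that the images lie on a perfect square grid. The function names are illustrative only. Real capture paths are not a uniform grid, so the result should only be expected to land in the same range as the reported 1.5 inches.

```python
import math

SQ_IN_PER_SQ_FT = 144.0

def grid_spacing_inches(area_sq_ft: float, num_images: int) -> float:
    """Spacing of a square grid that spreads num_images samples over the area.

    Assumption: samples form a perfect square grid (not claimed by the source).
    """
    area_sq_in = area_sq_ft * SQ_IN_PER_SQ_FT
    return math.sqrt(area_sq_in / num_images)

def mean_distance_to_nearest(spacing: float) -> float:
    """Mean distance from a uniform random point to the nearest grid node.

    For a point uniform in a square cell of side `spacing` centred on a node,
    the expected distance to the centre is
    spacing * (sqrt(2) + ln(1 + sqrt(2))) / 6.
    """
    return spacing * (math.sqrt(2.0) + math.log(1.0 + math.sqrt(2.0))) / 6.0

spacing = grid_spacing_inches(1000.0, 15000)
mean_dist = mean_distance_to_nearest(spacing)
print(f"grid spacing: {spacing:.2f} in, mean distance to nearest image: {mean_dist:.2f} in")
```

Under the grid assumption the spacing comes out at about three inches, consistent with capturing images "every few inches".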

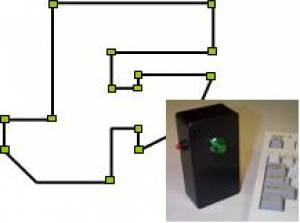

Omnidirectional camera used by our system

Mobile robot used for acquiring the sea of images

Fiducials and example planning system used to obtain images with a guaranteed pose accuracy

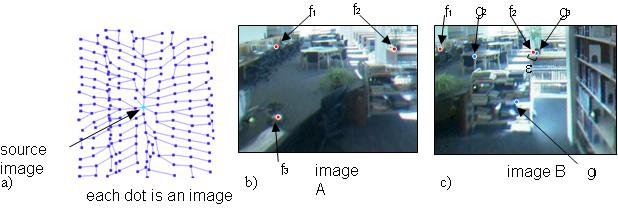

Feature Globalization. (a) Using the edges of a 2D Delaunay triangulation of the image viewpoints, we track outward from each source image along disjoint paths. (b) For a source image A, we detect features such as f1, f2, and f3 and add them to that image's list of untracked features. (c) We then iteratively track all untracked features to the next neighboring image B along each disjoint path. If a successfully tracked feature (such as f1 or f2) is within ε of an existing feature in image B, the pair (fi, gj) is marked as a potential correspondence.
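The proximity test at the end of this caption can be sketched as follows. This is a minimal illustration, not the project's implementation: it assumes features are 2D image positions, uses Euclidean distance, and compares every tracked feature against every existing one. The function name, the threshold name `eps`, and the sample coordinates are all hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def potential_correspondences(
    tracked: List[Point], existing: List[Point], eps: float
) -> List[Tuple[int, int]]:
    """Return index pairs (i, j) where tracked[i] lies within eps of existing[j].

    `tracked` holds features f_i tracked from image A into image B;
    `existing` holds features g_j already detected in image B.
    """
    pairs = []
    for i, f in enumerate(tracked):
        for j, g in enumerate(existing):
            if math.dist(f, g) <= eps:  # Euclidean distance (assumed metric)
                pairs.append((i, j))
    return pairs

# f1 and f2 land near existing features in B; f3 does not.
tracked_in_b = [(10.0, 10.0), (50.0, 40.0), (90.0, 90.0)]
existing_in_b = [(11.0, 10.5), (49.0, 41.0), (200.0, 200.0)]
matches = potential_correspondences(tracked_in_b, existing_in_b, eps=2.0)
print(matches)
```

The brute-force double loop keeps the sketch short; with many features per image a spatial index would be the more natural choice.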

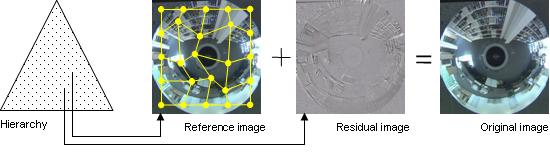

Image Hierarchy and Compression. We use a spatial image hierarchy combined with a compression algorithm. Original images are extracted from the hierarchy by warping reference images and adding the residual images.
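The reconstruction rule in this caption (warp a reference image, then add a residual) can be illustrated with a toy example. The sketch below is not the system's codec: it works on a single row of pixel values and uses a cyclic shift as a stand-in for the warp, both of which are assumptions made purely for illustration, as are the function names.

```python
from typing import List

def warp(reference: List[int], shift: int) -> List[int]:
    """Stand-in warp: cyclically shift one row of a panoramic image.

    The real system warps reference images using feature correspondences;
    a shift is used here only to keep the example small.
    """
    n = len(reference)
    return [reference[(i - shift) % n] for i in range(n)]

def encode_residual(original: List[int], reference: List[int], shift: int) -> List[int]:
    """Residual = original minus the prediction obtained by warping the reference."""
    predicted = warp(reference, shift)
    return [o - p for o, p in zip(original, predicted)]

def reconstruct(reference: List[int], residual: List[int], shift: int) -> List[int]:
    """Recover the original by warping the reference and adding the residual."""
    predicted = warp(reference, shift)
    return [p + r for p, r in zip(predicted, residual)]

reference_row = [10, 20, 30, 40, 50, 60]
original_row = [61, 12, 20, 29, 40, 52]

residual_row = encode_residual(original_row, reference_row, shift=1)
restored_row = reconstruct(reference_row, residual_row, shift=1)
print(residual_row, restored_row == original_row)
```

When the warp predicts the original well, the residual values stay small, which is what makes them cheaper to compress than the original image.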

We show cylindrical projections reconstructed for a novel view of a captured environment using one of three methods. (a) Simply blending together neighboring reference images. (b) Using a proxy to warp and blend reference images. (c) Using our approach, which combines feature tracking with the construction and labeling of a correspondence graph, enabling correspondences over a wide range of viewpoints and producing high-quality reconstructions without requiring dense depth information or a full 3D reconstruction.
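For comparison, method (a) can be sketched in a few lines. The weighting scheme below is an assumption, since the source does not say how the blend is weighted: it takes the three reference images whose viewpoints form a triangle around the novel viewpoint and blends them with barycentric weights. Images are reduced to short lists of pixel values, and all names are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def barycentric_weights(p: Point, a: Point, b: Point, c: Point) -> Tuple[float, float, float]:
    """Barycentric coordinates of p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle: viewpoints are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb

def blend(images: Sequence[List[float]], weights: Sequence[float]) -> List[float]:
    """Per-pixel weighted sum of equally sized images."""
    return [
        sum(w * img[i] for w, img in zip(weights, images))
        for i in range(len(images[0]))
    ]

# Three reference viewpoints on the capture plane and a novel viewpoint inside them.
view_a, view_b, view_c = (0.0, 0.0), (4.0, 0.0), (0.0, 4.0)
novel = (1.0, 1.0)

weights = barycentric_weights(novel, view_a, view_b, view_c)
blended = blend([[100.0, 0.0], [0.0, 100.0], [0.0, 0.0]], weights)
print(weights, blended)
```

Because this blend ignores scene geometry, features in the reference images do not line up and the result shows ghosting, which is the limitation that methods (b) and (c) address by warping before blending.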

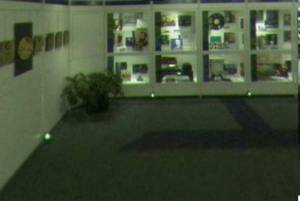

Example Reconstructions: Images left-to-right show reconstructed images with specular highlights moving over the surface of the bronze plaques.

Example Reconstructions: Images show prefiltered multiresolution reconstructed images for a far-to-near movement of a virtual observer through the environment.

Captured vs. Reconstructed Comparison. (left) A captured omnidirectional image. (right) A reconstructed omnidirectional image for a novel viewpoint that is as far as possible from the surrounding images.